27 Aug 2022

I planned to write this blog post about two years ago.

But for some reason I never did.

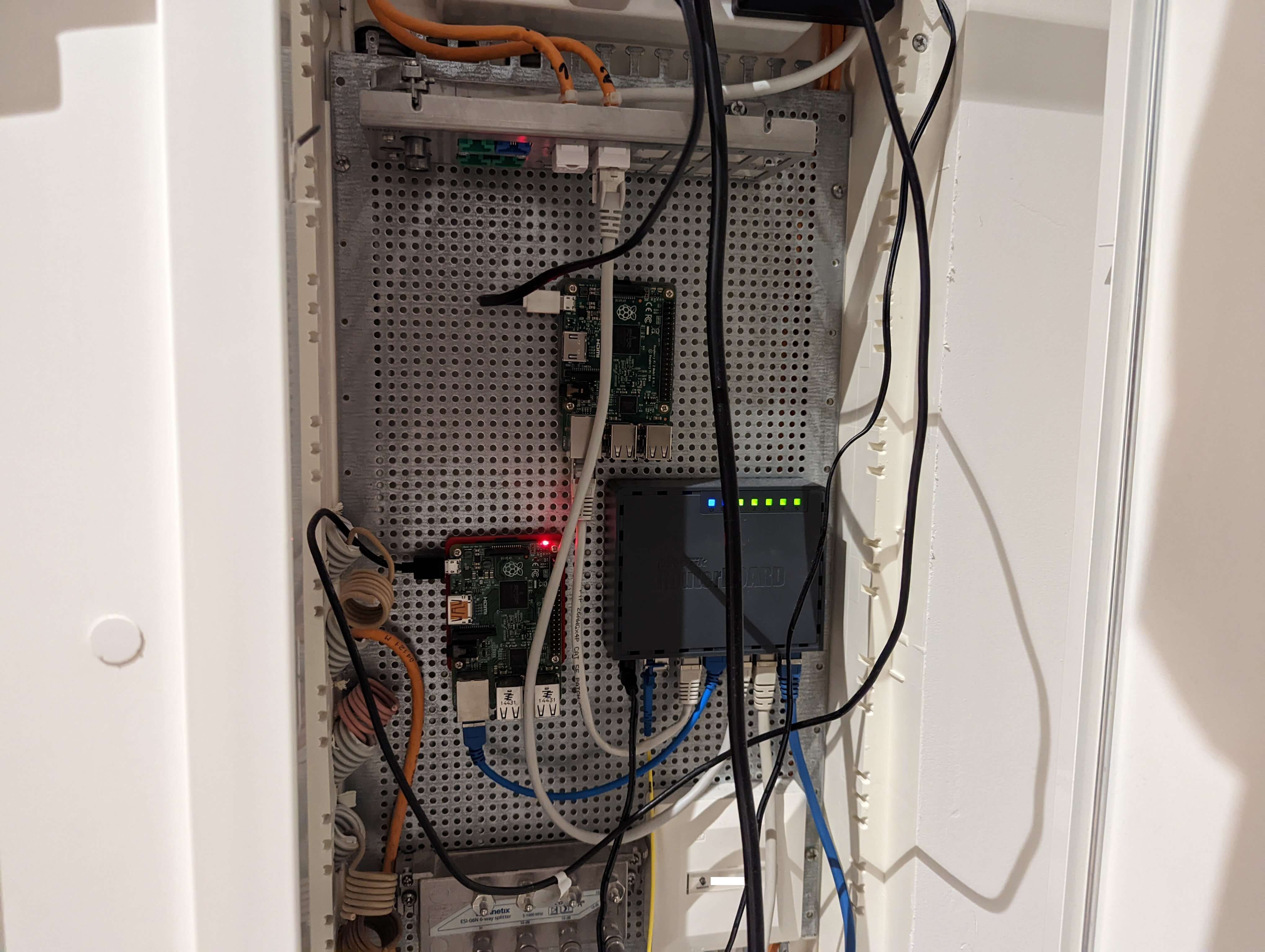

It is about how I mounted my router (see: hEX S The Good The Bad The Ugly) and my primary and secondary Raspberry Pis running DNS (see: DNS Server on NetBSD and DNS Server on Debian).

Iteration 1

As you can see, the first iteration of this setup was just to dump all the devices on the floor and get them running.

This was even before I switched to the hEX S router.

Iteration 2

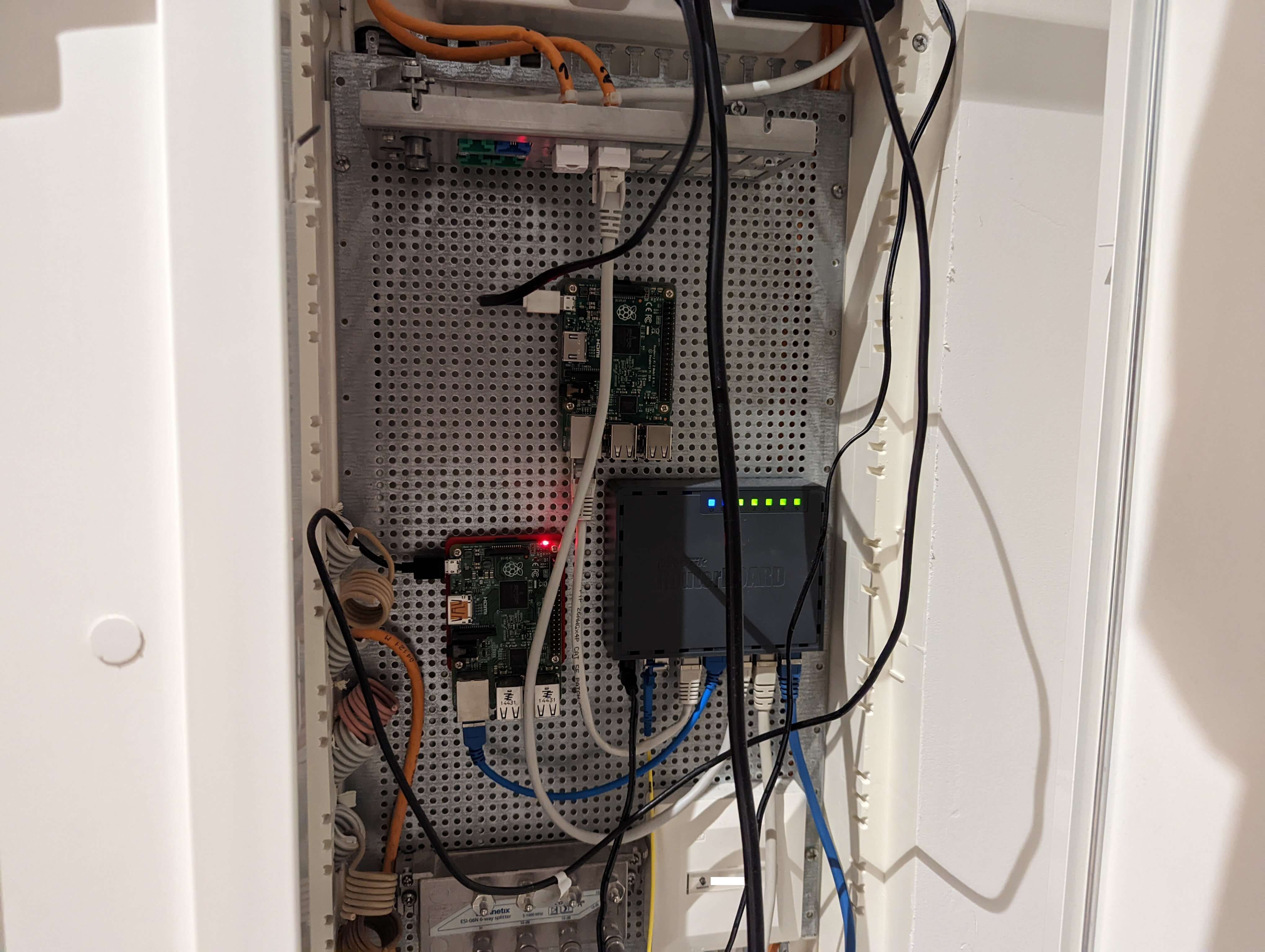

The next step was to figure out how to mount my devices on the perforated, galvanized mounting plate (Montageblech, gelocht, verzinkt).

For the hEX S this was simple, as MikroTik (the manufacturer of the device) states:

This device is designed for use indoors by placing it on the flat surface or mounting on the wall, mounting points are located on the bottom side of the device, screws are not included in the package.

Screws with size 4x25 mm fit nicely.

But what about the Raspberry Pi?

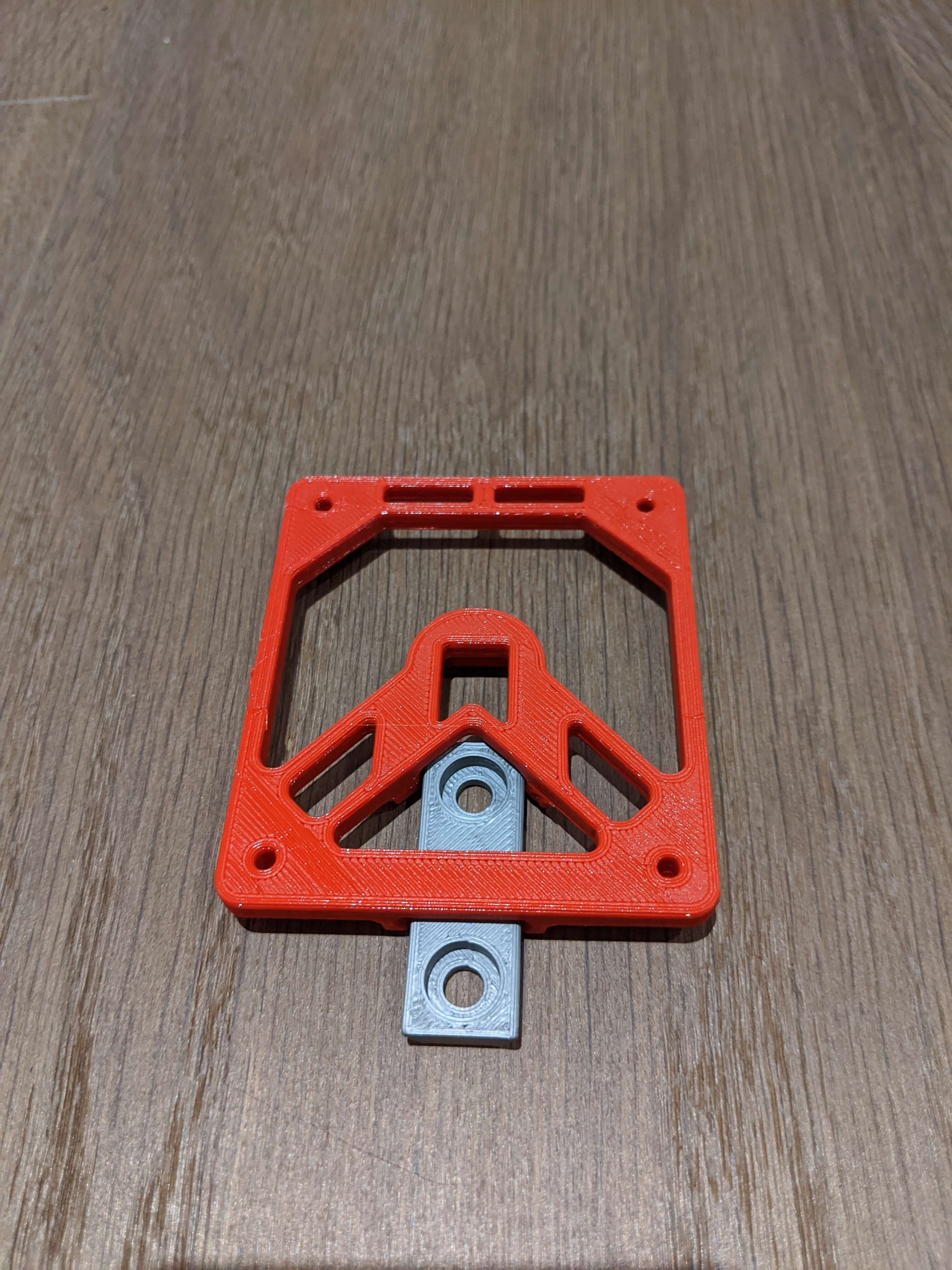

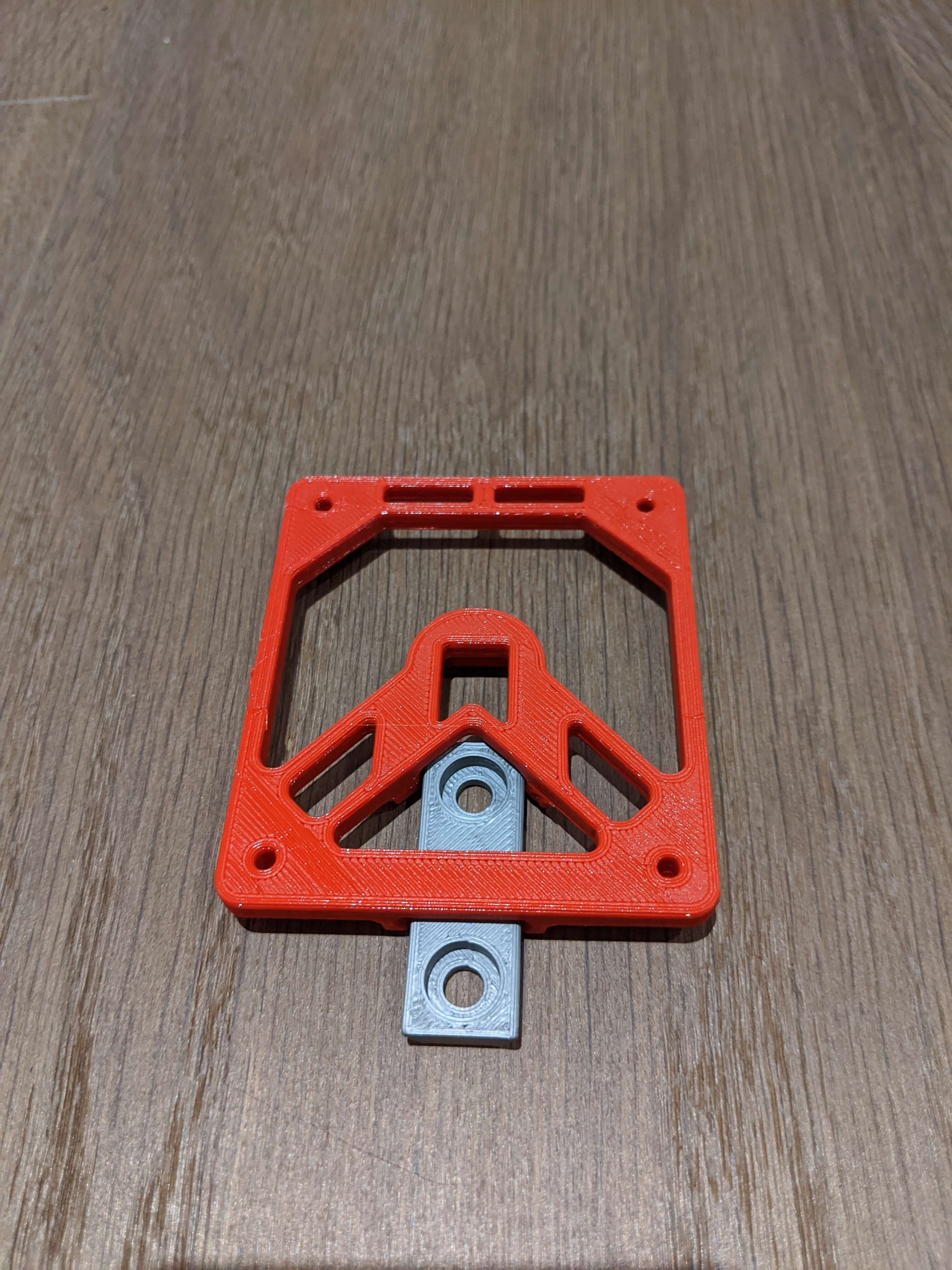

Let's 3D print something! I found a great Raspberry Pi Wall Mount and adapted it to fit the distance between the two screw holes.

I googled that the correct screws are sheet metal screws (Blechschrauben) 4.2x9.5 mm.

Since you cannot buy just a handful of these, I now own 100 of them.

(If you know me and need these screws for something, let me know.)

For some reason they are awful to work with, or I was holding them wrong.

They don't work for mounting the router because the screw head does not fit the mounting points on the back of the hEX S.

And I could not really screw them into the plate.

I ended up using random screws I had lying around to make it happen.

Which brings us to the next iteration:

Iteration 3

Since then I have improved the cable management a bit and also mounted the second Raspberry Pi.

Which gives us the current state:

15 Jul 2022

This is a short blog post explaining how to forward a port through a jump host.

I needed this to access a web UI which was only accessible via a jump host.

Here is the situation:

+---------------+    +---------------------+    +----------------------------+
|               |    |                     |    |                            |
| You (Host A)  +--->| Jump Host (Host B)  +--->| Target Host (Host C)       |
|               |    |                     |    | Web interface on port 443  |
+---------------+    +---------------------+    +----------------------------+

To make this convenient, we put most of the configuration into ~/.ssh/config:

Host HostB

Host HostC
    ProxyJump HostB

In reality you usually also have users, keys and hostnames to configure:

Host HostB
    User userb
    Hostname 192.168.1.1

Host HostC
    User userc
    Hostname HostC
    IdentityFile /home/userc/.ssh/keyfile
    ProxyJump HostB

And that's all. Now we can forward a port from HostC like this:

ssh -N HostC -L 8000:localhost:443

The web UI of HostC is then reachable at https://localhost:8000.
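If you want to script the tunnel, the command line can be assembled in Python. This is a minimal sketch: the helper name `tunnel_command` is hypothetical, and it only uses the `-N` and `-L` flags from the command above. Keep in mind that the `localhost` in the `-L` spec is resolved on the SSH server side (HostC), not on your own machine.

```python
def tunnel_command(host, local_port, target_host, target_port):
    """Build the argument list for an SSH local port forward.

    -N: do not run a remote command, only forward ports.
    -L: listen on local_port on this machine and forward each connection
        to target_host:target_port as seen from the SSH server (host).
    Hypothetical helper; user, key and ProxyJump come from ~/.ssh/config.
    """
    return [
        "ssh",
        "-N",
        "-L",
        f"{local_port}:{target_host}:{target_port}",
        host,
    ]
```

To open the tunnel you could pass the list to `subprocess.run(tunnel_command("HostC", 8000, "localhost", 443), check=True)`, which blocks until you stop it.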

10 Sep 2021

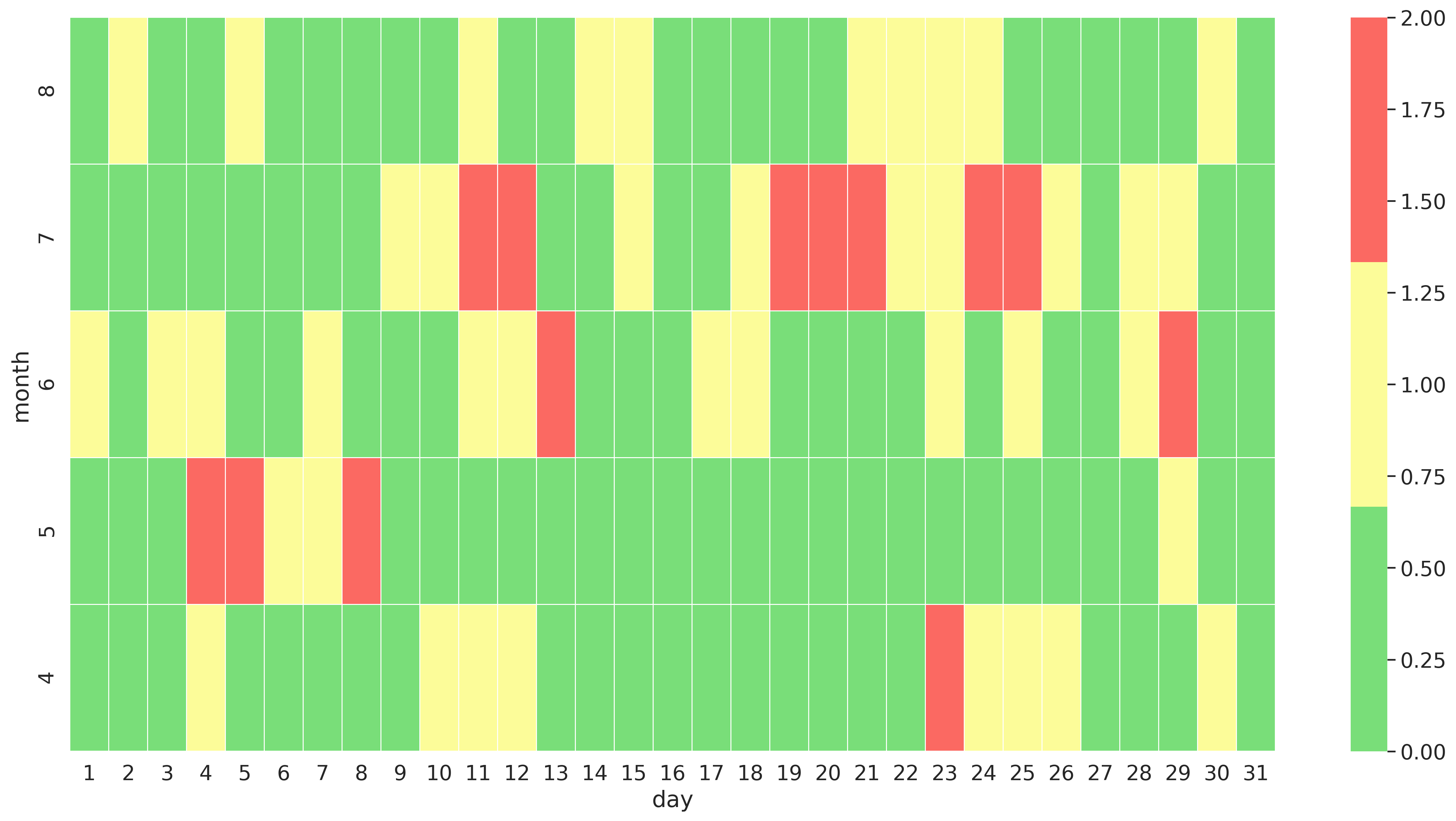

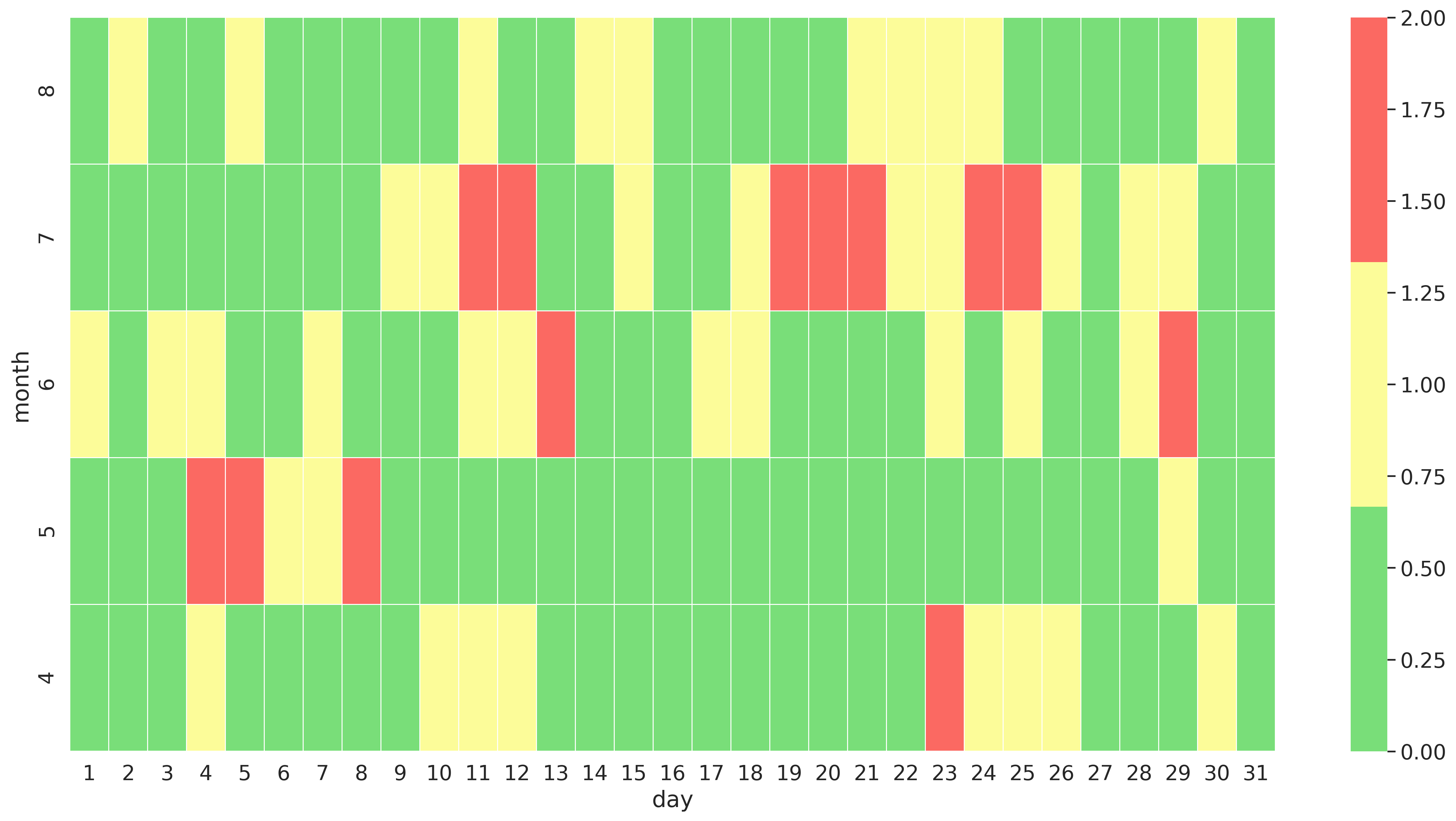

I recently had some data which I wanted to display in something like a heatmap.

We humans are much better at spotting patterns in a visualization than in raw numbers.

The data I was working with consists of dates, each with an intensity value.

It is stored in a CSV file which looks like this:

date,value
...
14-Jul-2021,
15-Jul-2021,1
16-Jul-2021,
17-Jul-2021,
18-Jul-2021,1
19-Jul-2021,2
20-Jul-2021,2
...
25-Jul-2021,2
26-Jul-2021,1
27-Jul-2021,
28-Jul-2021,1
29-Jul-2021,1
30-Jul-2021,
31-Jul-2021,
...
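Loading this file mostly comes down to parsing the `14-Jul-2021` date format and treating an empty value as 0. Here is a minimal standard-library sketch, assuming the file contains only real `date,value` rows (the `...` lines above just mark omitted data). `parse_rows` is a hypothetical name; the actual notebook may well do this differently, for example with pandas.

```python
import csv
import io
from datetime import datetime


def parse_rows(text):
    """Parse 'date,value' CSV text into (date, int) pairs.

    Dates look like 14-Jul-2021; an empty value is treated as 0.
    """
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        # %b matches abbreviated month names such as "Jul" (C/English locale).
        day = datetime.strptime(record["date"], "%d-%b-%Y").date()
        raw = (record["value"] or "").strip()
        rows.append((day, int(raw) if raw else 0))
    return rows


sample = "date,value\n14-Jul-2021,\n15-Jul-2021,1\n19-Jul-2021,2\n"
rows = parse_rows(sample)
```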

The idea is to display the value, which in this case is either not present (treated as 0), 1 or 2, on a heatmap which uses month and day as the x and y axes.

To achieve this a few steps are needed:

- Load the data from the CSV file
- Convert the date strings to dates
- Create two new columns, one with the day and one with the month
- Create a pivot table with month, day and the value
- Use the pivot table in reverse as input for seaborn.heatmap
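The pivot step can be illustrated without pandas. This sketch assumes the rows are already parsed into `(date, value)` pairs; `month_day_grid` is a hypothetical helper, not the code from the notebook, and it does not reproduce the "in reverse" step. It puts days on the rows (y axis) and months on the columns (x axis) and leaves cells without data as NaN.

```python
import math
from datetime import date


def month_day_grid(rows):
    """Pivot (date, value) pairs into a 31 x 12 grid.

    grid[day - 1][month - 1] holds the value for that day and month.
    Cells without data stay NaN.
    """
    grid = [[math.nan] * 12 for _ in range(31)]
    for when, value in rows:
        cell = grid[when.day - 1][when.month - 1]
        # Sum values that land on the same cell (e.g. data spanning several
        # years); pandas.pivot_table would average them by default instead.
        grid[when.day - 1][when.month - 1] = value if math.isnan(cell) else cell + value
    return grid


grid = month_day_grid([(date(2021, 7, 15), 1), (date(2021, 7, 19), 2)])
```

`seaborn.heatmap` accepts 2D array-like data, so passing `grid` directly should work. Seaborn masks missing values automatically, so cells for days that do not exist (like 30 February) stay empty.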

And that's all that is needed to create a heatmap which looks like this:

If you are interested in the actual code I used, check out the Jupyter notebook I put into a git repository: jupiter-notebook-python-heatmap

21 Mar 2021

As you might know, I am more of a Ruby programmer.

But from time to time I use other languages, like Python.

That is why we talk about my Python setup today.

A few things have happened since I last built some projects with Python.

Two of these things are Poetry and pyproject.toml.

Let's quickly talk about Poetry, which promises "Python packaging and dependency management made easy".

The main focus is on dependency management; for example, Python finally gets a dependency lock file like Ruby (Bundler) and npm have.

It also handles virtual environments for you, which removes the need for virtualenv and similar tools.

And it uses the new pyproject.toml file, a single config file for all your tools.

Read more about it here: What the heck is pyproject.toml?

FlakeHell is like the good old Flake8 we all loved, only cooler!

It lets you combine all your linters into one tool and run them together.

My Setup

Enough talk, let's look at my current setup for a project.

This is my pyproject.toml file.

[tool.poetry]
name = "My Python Project"
version = "0.1.0"
description = "Python Project goes Brrrrrr"
authors = ["Me <email>"]
license = "BSD"

[tool.poetry.dependencies]
python = "^3.9"
pydantic = "*"

[tool.poetry.dev-dependencies]
pytest = "*"
sphinx = "*"
flakehell = "*"
pep8-naming = "*"
flake8-2020 = "*"
flake8-use-fstring = "*"
flake8-docstrings = "*"
flake8-isort = "*"
flake8-black = "*"

[tool.pytest.ini_options]
minversion = "6.0"
addopts = "--ff -ra -v"
python_functions = [
    "should_*",
    "test_*",
]
testpaths = [
    "tests",
    "builder",
]

[build-system]
requires = ["poetry-core>=1.0.0"]
build-backend = "poetry.core.masonry.api"

[[tool.poetry.source]]
name = "gitlab"
url = "https://$GITLAB/api/v4/projects/9999/packages/pypi/simple"

[tool.flakehell]
max_line_length = 100
show_source = true

[tool.isort]
multi_line_output = 3
include_trailing_comma = true
force_grid_wrap = 0
use_parentheses = true
ensure_newline_before_comments = true
line_length = 100

[tool.black]
line-length = 100

[tool.flakehell.plugins]
pyflakes = ["+*"]
pycodestyle = ["+*"]
pep8-naming = ["+*"]
"flake8-*" = ["+*"]

[tool.flakehell.exceptions."tests/"]
flake8-docstrings = ["-*"]

Let's look at this in detail.

We have [tool.poetry.dev-dependencies], where we list all our dev dependencies. Big surprise, I know :D.

First we see pytest for tests and sphinx for docs, and, as already mentioned at the start, I use FlakeHell with these plugins:

- pep8-naming
- flake8-2020
- flake8-use-fstring
- flake8-docstrings
- flake8-isort
- flake8-black

Check out awesome-flake8-extensions and choose your own adventure!

All the configuration needed for pytest is in the [tool.pytest.ini_options] table.
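To unpack the pytest options: `--ff` runs the tests that failed last time first, `-ra` prints a short summary for every outcome except passed, `-v` makes the output verbose, `testpaths` lists the directories searched when no path is given, and `python_functions` makes pytest collect functions named `should_*` in addition to `test_*`. Below is a hypothetical test module (the file name and the functions are made up for illustration); note that the file itself still has to match pytest's default `python_files` patterns (`test_*.py` or `*_test.py`).

```python
# tests/test_math_utils.py (hypothetical file and function names)


def add(a, b):
    """Stand-in for the code under test; normally imported from the package."""
    return a + b


def should_add_two_numbers():
    # Collected because python_functions includes "should_*".
    assert add(2, 3) == 5


def test_add_is_commutative():
    # Collected through the "test_*" pattern.
    assert add(2, 3) == add(3, 2)
```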

GitLab

Did you know that GitLab can host PyPI packages in the Package Registry?

The Package Registry lets you publish private pip packages to a PyPI-compatible registry.

For example, we can deploy pip packages with the following CI job, where 9999 is the ID of the project we want to use as the Package Registry:

deploy-package:
  stage: deploy
  only:
    - tags
  script:
    # A Poetry project has no setup.py; build sdist and wheel from pyproject.toml
    - python -m pip install build twine
    - python -m build
    - twine upload
      --username gitlab-ci-token
      --password $CI_JOB_TOKEN
      --repository-url $CI_API_V4_URL/projects/9999/packages/pypi
      dist/*

And to consume the packages, I added this to pyproject.toml:

[[tool.poetry.source]]
name = "gitlab"
url = "https://$GITLAB/api/v4/projects/9999/packages/pypi/simple"

Depending on your GitLab configuration you need some authentication for that, which you can easily set up with:

poetry config http-basic.gitlab __token__ $GITLAB_TOKEN

Check out the GitLab documentation for all the details.
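Note that the upload URL in the CI job and the index URL in pyproject.toml differ only by the trailing `/simple`: twine uploads to the registry endpoint, while pip and Poetry read the PEP 503 "simple" index beneath it. A small sketch to make the relationship explicit, assuming a hypothetical helper name and the placeholder host `gitlab.example.com`:

```python
def gitlab_pypi_urls(api_v4_url, project_id):
    """Return (upload_url, index_url) for a project's PyPI package registry.

    twine uploads to .../packages/pypi, while pip and Poetry install from
    the "simple" index at .../packages/pypi/simple.
    """
    base = f"{api_v4_url.rstrip('/')}/projects/{project_id}/packages/pypi"
    return base, f"{base}/simple"


upload_url, index_url = gitlab_pypi_urls("https://gitlab.example.com/api/v4", 9999)
```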

How to use it

With all this in place, I still create a small Makefile, simply because it lets me type even less.

.PHONY: install format lint test

install:
	poetry install

format:
	poetry run isort src tests
	poetry run black src tests

lint:
	poetry run flakehell lint src tests

test:
	poetry run pytest

As we can see, format, lint and test become super easy because all the configuration lives in pyproject.toml.

12 Dec 2020

I use pass, the standard Unix password manager, as my primary password manager.

This worked great in the past: I have a git repository which I can clone on my phone and my computers to access all my passwords and secrets.

The repository is hosted on a local Gitea instance, running on port 3000 with its built-in TLS support (a very important detail).

Intro

Until this week.

What happened was that I destroyed my Pixel 3a and replaced it with a Pixel 4a.

Which is in itself sad enough.

But when I tried to set up the password store, the first step was to install OpenKeychain: Easy PGP and import my PGP key.

This part worked fine, so next up was installing the Password Store (legacy) app.

As you can see, this app is apparently legacy and no longer receives updates.

Fair enough, let's just use the new app with the same name, Password Store.

Now the sad part starts: the new app does not support custom ports as part of the git clone URL.

And I was not able to clone the repository with a key or in any other way, neither with the new nor with the old app.

This is very unfortunate, because setting up many apps requires logging in again, which is hard without a password manager.

So my first thought was to just use the built-in TLS option and run Gitea on the standard port 443.

A good idea in theory, but in practice this would mean either some weird hacks to allow a non-root user to bind to a port below 1024, or running Gitea as root.

Neither is a great option, but I tried the first one regardless; there is a guide on how to make that happen with mac_portacl. (I quickly gave up on this idea.)

So the next best thing was to finally do it the correct way and use an nginx reverse proxy with proper Let's Encrypt certificates.

The last time I tried to do that, two years ago, I gave up halfway through. Not sure why.

Let's Encrypt setup

So here is how my Let's Encrypt setup works.

I use dehydrated, running on my host via a cron job.

This is the entry in the root crontab:

0 0 */5 * * /usr/local/etc/dehydrated/run.sh >/dev/null 2>&1
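A detail about this crontab entry: `*/5` in the day-of-month field does not mean "every five days" across month boundaries. The field's range starts at 1, so the job runs on the days listed below, and after the 31st it runs again on the 1st. That is harmless here, because `dehydrated --cron` skips certificates that are not yet due for renewal. A quick sketch to list the matching days (`cron_step_days` is a hypothetical helper):

```python
def cron_step_days(step, days_in_month=31):
    """Days of the month matched by "*/step" in the day-of-month field.

    The field's range starts at 1, so */5 matches 1, 6, 11, ...
    """
    return [day for day in range(1, days_in_month + 1) if (day - 1) % step == 0]


print(cron_step_days(5))  # [1, 6, 11, 16, 21, 26, 31]
```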

The run.sh script calls dehydrated as my user l33tname to refresh the certificates and then copies them into the jails.

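#!/bin/sh

deploy()
{
    host=$1
    cert_location="/usr/local/etc/dehydrated/certs/$host.domain.example/"
    deploy_location="/zroot/iocage/jails/$host/root/usr/local/etc/ssl/"

    # -L: copy the files the symlinks point to, not the symlinks themselves
    cp -L "${cert_location}privkey.pem" "${deploy_location}privkey.pem"
    cp -L "${cert_location}fullchain.pem" "${deploy_location}chain.pem"

    # The private key must not be world-readable; the chain is public
    chmod 600 "${deploy_location}privkey.pem"
    chmod 644 "${deploy_location}chain.pem"
}

# Renew certificates as the unprivileged user
su -m l33tname -c 'bash /usr/local/bin/dehydrated --cron'

deploy "jailname"
iocage exec jailname "service nginx restart"

# Move unused certificate files to the archive directory
su -m l33tname -c 'bash /usr/local/bin/dehydrated --cleanup'

echo "ssl renew $(date)" >> /tmp/ssl.log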

If you want to adapt this script, change the user (l33tname), the name of the jail (jailname) and your domain in cert_location (.domain.example).

Make sure all the important files and directories are owned by your user; currently these are ., accounts, archive, certs, config, domains.txt and hook.sh.
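For comparison, here is the `deploy()` step of run.sh written in Python. It is a sketch, not part of my setup: the function signature and the `domain` default are assumptions, and the paths mirror run.sh (`/usr/local/etc/dehydrated/certs` and `/zroot/iocage/jails`). Unlike the shell version, it creates the target directory if it is missing.

```python
import os
import shutil
from pathlib import Path


def deploy(host, certs_root, jails_root, domain="domain.example"):
    """Copy a host's key and certificate chain into its jail (like run.sh).

    shutil.copyfile follows symlinks (like cp -L), so the current files
    are copied instead of the links dehydrated maintains.
    """
    cert_location = Path(certs_root) / f"{host}.{domain}"
    deploy_location = Path(jails_root) / host / "root/usr/local/etc/ssl"
    deploy_location.mkdir(parents=True, exist_ok=True)

    key = deploy_location / "privkey.pem"
    chain = deploy_location / "chain.pem"
    shutil.copyfile(cert_location / "privkey.pem", key)
    shutil.copyfile(cert_location / "fullchain.pem", chain)

    # Only the owner (root, which nginx's master process runs as) may read
    # the private key; the certificate chain is public.
    os.chmod(key, 0o600)
    os.chmod(chain, 0o644)
    return deploy_location
```

Usage would be `deploy("jailname", "/usr/local/etc/dehydrated/certs", "/zroot/iocage/jails")`, run as root so it can write into the jail.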

Now the question is: how does dehydrated refresh the certificates over DNS?

And I'm happy to report things got better since I last tried it.

I get my domains from iwantmyname and they provide an API to update DNS entries.

Since my last attempt, even deletion works, so no unused TXT entries are left in your DNS setup.

And here is the hook.sh script which enables all this magic:

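#!/usr/local/bin/bash

# iwantmyname account credentials for the DDNS API
# (named IWMN_* so the standard USER variable is not overwritten)
IWMN_USER="myemail@example.com"
IWMN_PASS="mypassword"

deploy_challenge() {
    local DOMAIN="${1}" TOKEN_FILENAME="${2}" TOKEN_VALUE="${3}"
    # Create the TXT record _acme-challenge.<domain> with the token value
    curl -s -u "$IWMN_USER:$IWMN_PASS" "https://iwantmyname.com/basicauth/ddns?hostname=_acme-challenge.${DOMAIN}.&type=txt&value=$TOKEN_VALUE"
    printf '\nSleeping to give DNS a chance to update\n'
    sleep 10
}

clean_challenge() {
    local DOMAIN="${1}" TOKEN_FILENAME="${2}" TOKEN_VALUE="${3}"
    # Delete the TXT record again
    curl -s -u "$IWMN_USER:$IWMN_PASS" "https://iwantmyname.com/basicauth/ddns?hostname=_acme-challenge.${DOMAIN}.&type=txt&value=delete"
    sleep 10
}

# dehydrated calls the hook as: hook.sh <handler> <arguments...>
HANDLER="$1"; shift
if [[ "${HANDLER}" =~ ^(deploy_challenge|clean_challenge)$ ]]; then
    "$HANDLER" "$@"
fi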

Or rather, these are the two functions you need to implement, here with the curl commands needed for iwantmyname.
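Both hook functions call the same iwantmyname endpoint; only the `value` parameter changes (the token value to create the TXT record, `delete` to remove it). Here is a hypothetical Python helper that builds the same URL, which can be handy for checking what the hook will request. It does not perform the authenticated request itself.

```python
from urllib.parse import urlencode

DDNS_ENDPOINT = "https://iwantmyname.com/basicauth/ddns"


def challenge_url(domain, value):
    """Build the DDNS URL that hook.sh requests for the ACME TXT record.

    deploy_challenge passes the token value; clean_challenge passes "delete".
    """
    query = urlencode({
        "hostname": f"_acme-challenge.{domain}.",
        "type": "txt",
        "value": value,
    })
    return f"{DDNS_ENDPOINT}?{query}"
```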

What is left now is to change the dehydrated config to use hook.sh.

Make sure HOOK="${BASEDIR}/hook.sh" and CHALLENGETYPE="dns-01" are set, along with any other config values you want, like CONTACT_EMAIL.

Then list all hostnames you want a certificate for in domains.txt.
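domains.txt holds one certificate per line. The first hostname on a line is the main name and also the directory under `certs/` that `cert_location` in run.sh points to; further names on the same line end up in the same certificate. Below is a simplified parser as a sketch (hypothetical helper). It skips blank lines and lines starting with `#`, and it ignores dehydrated's other syntax, such as certificate aliases.

```python
def parse_domains_txt(text):
    """Return one list of hostnames per certificate in a domains.txt file."""
    certificates = []
    for line in text.splitlines():
        line = line.strip()
        # Skip empty lines and comment lines.
        if not line or line.startswith("#"):
            continue
        certificates.append(line.split())
    return certificates


sample = "# certificates\njailname.domain.example\n\ngit.domain.example www.domain.example\n"
certificates = parse_domains_txt(sample)
```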

Last but not least, accept the Let's Encrypt terms of service with something like this:

su -m l33tname -c '/usr/local/bin/dehydrated --register --accept-terms'

That's it! It takes some time to set up, but it is worth it to have valid TLS certificates for all your services.

Outro

With all that in place, I set up Gitea without TLS behind an nginx TLS proxy. This allows me to clone my password repository over HTTPS in the new app.

So finally I'm able to access all my passwords again and finish logging in to all my apps.